How to Reduce Build Time with Depot: A Step-by-Step Guide

Depot offers cloud-accelerated container builds that the company says are up to 40 times faster than traditional methods.

Turbocharge Your Container Builds with High-Performance CPUs

Building containers can be time-consuming, especially in a fast-paced software development environment. High-performance CPUs can shorten those builds considerably and make the whole containerization process faster and more efficient.

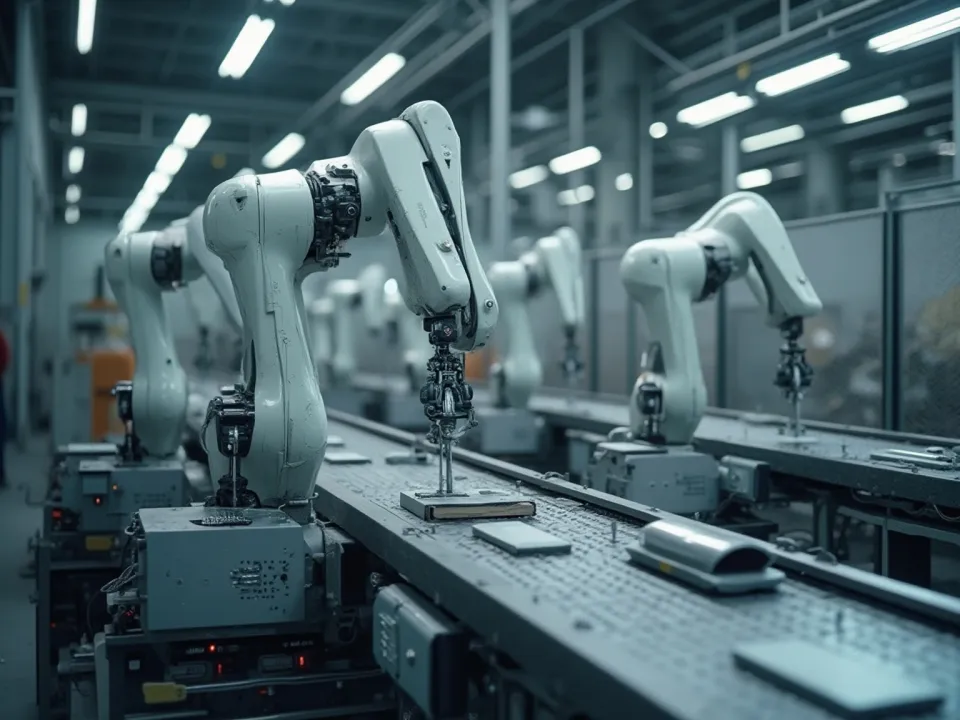

The Rise of Containerization in Modern Software Development

Containerization has become a standard part of modern software development. Containers let developers package applications and their dependencies into isolated, portable units that run consistently across environments. This has driven wide adoption of technologies like Docker and Kubernetes.

Containers offer several advantages. They simplify deployment, enhance scalability, and improve resource utilization. However, as containerization becomes more prevalent, the need for speed and efficiency in building these containers has grown.

Why CPU Performance Matters for Container Builds

CPU performance plays a critical role in the speed and efficiency of container builds and deployments. When we talk about high-performance CPUs, we refer to processors with superior clock speeds, higher core counts, and larger cache sizes. These features directly impact how quickly and efficiently containers can be built and deployed.

High-performance CPUs execute more instructions per second, which shortens the compile and packaging steps that dominate many image builds. They also handle concurrent work better, so several images or build stages can be processed in parallel, a common scenario in modern DevOps environments.

Key Factors for High-Performance CPUs in Container Workloads

Several factors contribute to making a CPU high-performance for container workloads:

Clock Speed

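Clock speed, measured in GHz, is the number of cycles (in billions) a CPU executes per second. All else being equal, a higher clock speed finishes individual tasks faster, which helps with the time-sensitive, largely sequential steps of a container build. Clock speed is not the whole story, though: how much work a CPU completes per cycle also differs between processor generations.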
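To make the relationship concrete, here is a small worked example in Python. It assumes a hypothetical build step that needs a fixed number of clock cycles and that both CPUs complete the same work per cycle, which real processors generally do not. Treat it as arithmetic, not a benchmark.

```python
def task_seconds(cycles: float, clock_ghz: float) -> float:
    """Seconds needed to run `cycles` clock cycles at `clock_ghz` GHz.

    Simplifying assumption: both CPUs do the same amount of work per cycle.
    """
    if clock_ghz <= 0:
        raise ValueError("clock_ghz must be positive")
    cycles_per_second = clock_ghz * 1e9  # 1 GHz = one billion cycles per second
    return cycles / cycles_per_second


# Hypothetical compile step that needs 30 billion cycles.
WORK_CYCLES = 30e9

for ghz in (3.0, 4.0):
    print(f"{ghz:.1f} GHz -> {task_seconds(WORK_CYCLES, ghz):.1f} s")
```

Under the equal-work-per-cycle assumption, raising the clock from 3 GHz to 4 GHz cuts this step from 10 s to 7.5 s, a 25% reduction.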

Core Count

A CPU with multiple cores can handle concurrent tasks more effectively. In containerization, where multiple containers are often built and deployed simultaneously, a higher core count ensures smoother multitasking and reduced bottlenecks.
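How much extra cores help depends on how much of a build can actually run concurrently. A common way to estimate this is Amdahl's law, a standard model that the article itself does not cite: if a fraction p of the work parallelizes perfectly, n cores give an ideal speedup of 1 / ((1 - p) + p / n). The Python sketch below assumes a hypothetical build in which 80% of the work parallelizes.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup on `cores` cores when `parallel_fraction` of the work parallelizes."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction  # this part always runs on a single core
    return 1.0 / (serial + parallel_fraction / cores)


# Hypothetical build where 80% of the work (e.g. independent compile jobs) parallelizes.
for n in (1, 4, 16, 64):
    print(n, round(amdahl_speedup(0.8, n), 2))
```

With p = 0.8 the speedup is 2.5 on 4 cores and 4.0 on 16 cores, and it can never exceed 1 / 0.2 = 5 however many cores are added, because the serial 20% still runs on one core. Real builds also hit I/O and memory limits, so these figures are upper bounds.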

Cache Size

Cache memory acts as a buffer between the CPU and main memory. Larger caches keep more frequently accessed data close to the CPU, reducing latency and speeding up computation. This helps container builds because steps such as compilation repeatedly touch the same code and data.

Case Studies of Businesses Benefiting from High-Performance CPUs

Case Study 1: Tech Innovators Inc.

Tech Innovators Inc. upgraded their infrastructure to include high-performance CPUs. They reported a 40% reduction in container build times, significantly enhancing their development pipeline. This improvement allowed them to release updates and new features faster, giving them a competitive edge.

Case Study 2: DevOps Solutions Ltd.

DevOps Solutions Ltd. faced challenges with slow container builds impacting their CI/CD pipeline. By investing in high-performance CPUs, they saw a 50% increase in build efficiency. Their teams could now run more build jobs in parallel, accelerating their overall development process.

Case Study 3: Cloud Services Corp.

Cloud Services Corp. adopted high-performance CPUs to support their containerized microservices architecture. This upgrade resulted in a 30% improvement in deployment times and better resource allocation. Their customers experienced enhanced performance and reliability in the services offered.
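The case studies report their gains in different units, so the numbers are not directly comparable. The Python sketch below converts between a cut in time per build and a gain in throughput. It assumes that the "50% increase in build efficiency" means 50% more builds completed per unit of time; the case study does not define the term, so that interpretation is an assumption.

```python
def time_cut_from_throughput_gain(gain: float) -> float:
    """Fractional cut in time per build implied by a throughput gain.

    Assumes time per build = 1 / throughput, so +50% throughput (gain=0.5)
    leaves each build taking 1 / 1.5 of its original time.
    """
    if gain <= -1.0:
        raise ValueError("gain must be greater than -1")
    return 1.0 - 1.0 / (1.0 + gain)


def throughput_gain_from_time_cut(cut: float) -> float:
    """Throughput gain implied by cutting time per build by `cut` (0 <= cut < 1)."""
    if not 0.0 <= cut < 1.0:
        raise ValueError("cut must be in [0, 1)")
    return 1.0 / (1.0 - cut) - 1.0


print(f"+50% throughput = {time_cut_from_throughput_gain(0.50):.1%} shorter builds")
print(f"-40% build time = {throughput_gain_from_time_cut(0.40):.1%} more builds per hour")
```

Under that reading, the second company's gain corresponds to roughly a 33% cut in build time, while the first company's 40% cut equals about 67% more builds per hour.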

The Future of High-Performance Computing in Containerization

The landscape of high-performance computing is continuously evolving, and new technologies and practices may speed up container builds further.

Emerging Technologies

Technologies like quantum computing and AI-enhanced processors are on the horizon. They promise more processing power for certain workloads, although how much they will help general-purpose tasks such as container builds remains an open question.

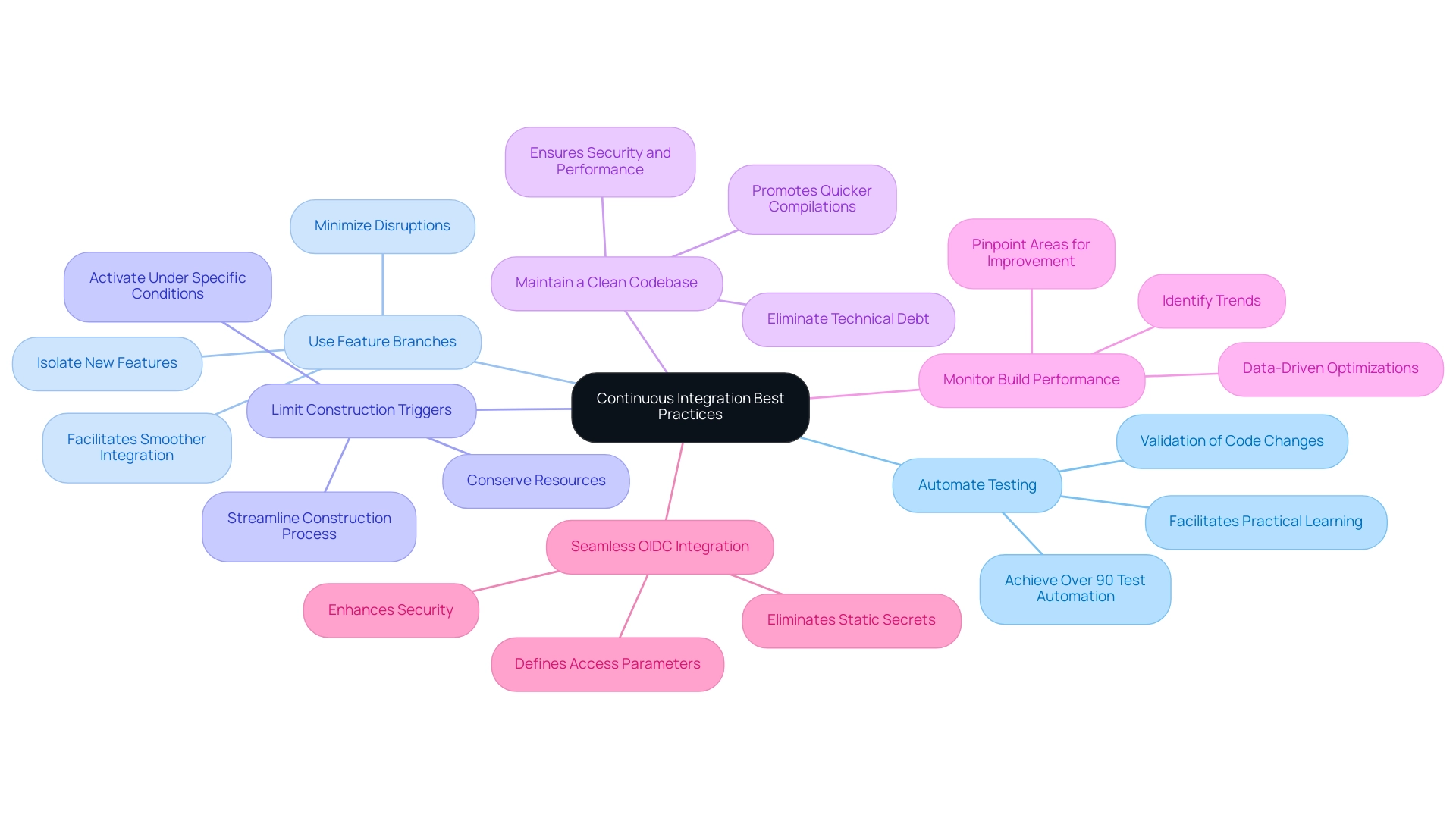

Best Practices

To fully leverage high-performance CPUs, developers and DevOps teams should adopt best practices. This includes optimizing container configurations, utilizing caching mechanisms, and employing parallel processing techniques.

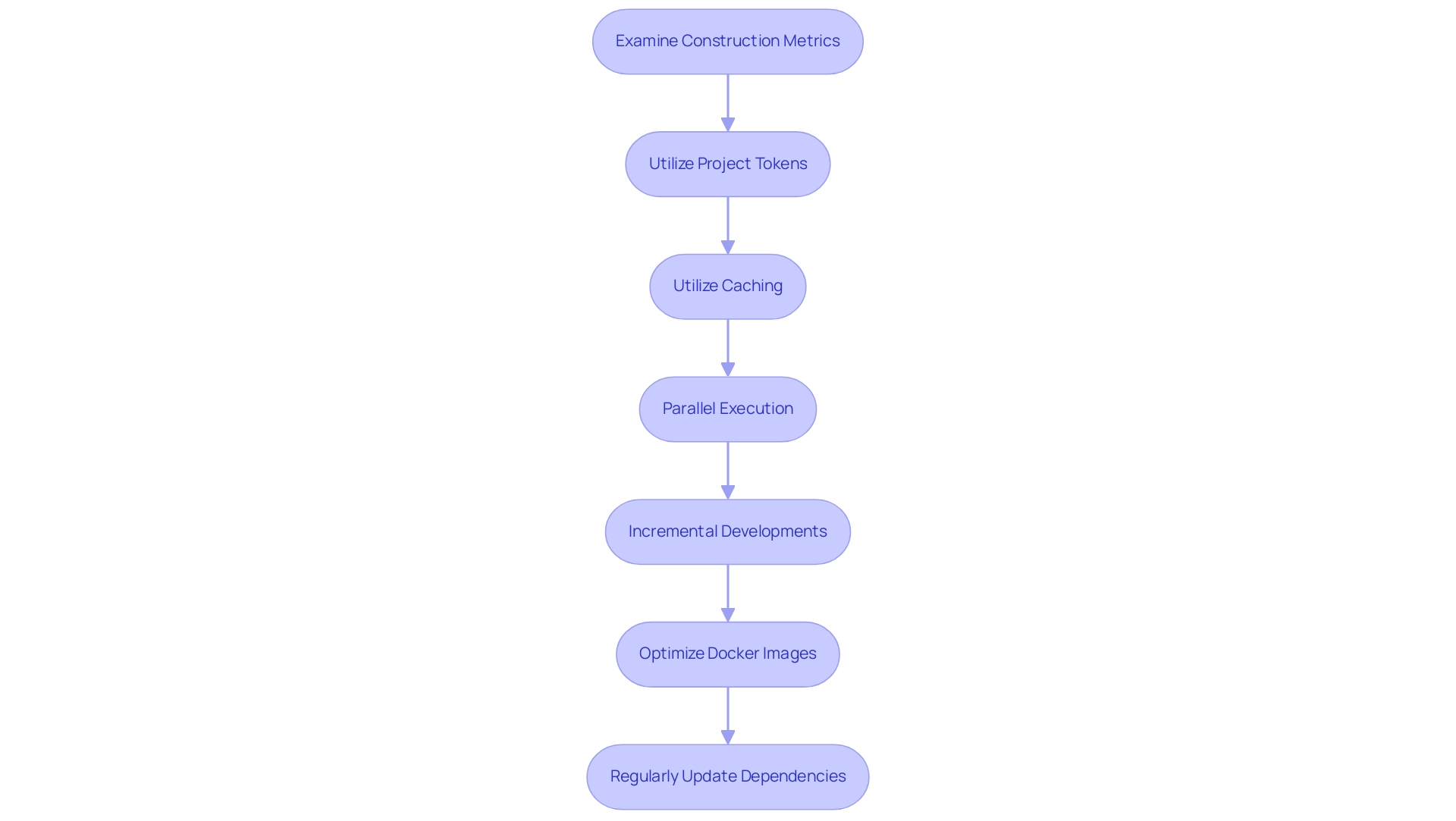

Practical Tips for Optimizing Container Builds

Use Multi-Stage Builds

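Multi-stage builds in Docker let you compile code or install dependencies in one stage and copy only the resulting artifacts into a slim final stage, which produces smaller images. With Docker's BuildKit backend, independent stages can also run in parallel, and stages the target does not need are skipped, reducing overall build time.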
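Why can stages cut build time and not just image size? The idealized Python model below treats a multi-stage Dockerfile as a dependency graph. The stage names and durations are hypothetical, and the model ignores caching, CPU contention, and I/O. It only shows that a builder which skips unneeded stages and overlaps independent ones finishes in the time of the longest dependency chain rather than the sum of all stages.

```python
from functools import lru_cache

# Hypothetical stages: name -> (duration in seconds, stages it depends on).
STAGES = {
    "deps": (40, ()),
    "frontend": (60, ("deps",)),
    "backend": (90, ("deps",)),
    "docs": (30, ()),  # not used by the final stage
    "final": (10, ("frontend", "backend")),
}


def needed_stages(target: str) -> set:
    """The target plus every stage it depends on, directly or transitively."""
    seen, stack = set(), [target]
    while stack:
        name = stack.pop()
        if name not in seen:
            seen.add(name)
            stack.extend(STAGES[name][1])
    return seen


def run_everything_in_order() -> int:
    """Every stage runs, one after another."""
    return sum(duration for duration, _ in STAGES.values())


def skip_unused_in_order(target: str) -> int:
    """Only the needed stages run, still one after another."""
    return sum(STAGES[name][0] for name in needed_stages(target))


@lru_cache(maxsize=None)
def finish_time(name: str) -> int:
    """Earliest finish if independent stages overlap: own time + slowest dependency."""
    duration, deps = STAGES[name]
    return duration + max((finish_time(dep) for dep in deps), default=0)


print(run_everything_in_order())      # all stages, sequential
print(skip_unused_in_order("final"))  # unused stages skipped
print(finish_time("final"))           # skipping plus overlapping independent stages
```

For these made-up numbers the three strategies take 230 s, 200 s, and 140 s: skipping `docs` saves 30 s, and running `frontend` and `backend` side by side saves another 60 s.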

Leverage Caching

Implementing proper caching strategies can significantly speed up container builds. Caching dependencies and intermediate layers ensures that only the necessary parts of the build process are re-executed, saving valuable time and resources.
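Layer caching works because each layer's cache key depends on everything that produced it. The Python sketch below is a simplified model of that idea, not Docker's actual implementation: a key is a hash of the parent layer's key, the instruction text, and any input file contents (roughly mirroring Docker's documented rule that COPY checks file contents while RUN is matched on its command string). Because keys chain, a change in one step invalidates that step and every step after it, which is why rarely changing steps such as installing dependencies should come before frequently changing ones such as copying source code. The file names and contents are hypothetical.

```python
import hashlib


def layer_key(parent_key: str, instruction: str, inputs: bytes = b"") -> str:
    """Cache key for one layer: parent key + instruction + input file contents."""
    h = hashlib.sha256()
    for part in (parent_key.encode(), instruction.encode(), inputs):
        h.update(len(part).to_bytes(8, "big"))  # length prefix keeps fields unambiguous
        h.update(part)
    return h.hexdigest()


def build(steps, cache: set) -> list:
    """Run (instruction, inputs) steps in order, reusing cached layers.

    Returns the instructions that had to execute. After the first miss,
    every later key changes too, so all later steps rerun.
    """
    key = ""
    executed = []
    for instruction, inputs in steps:
        key = layer_key(key, instruction, inputs)
        if key not in cache:
            executed.append(instruction)  # a real builder would run the step here
            cache.add(key)
    return executed


def app_steps(source: bytes):
    """Hypothetical image: dependencies first, application source last."""
    requirements = b"requests==2.31.0\n"  # hypothetical dependency list
    return [
        ("COPY requirements.txt .", requirements),
        ("RUN pip install -r requirements.txt", b""),  # keyed on command text only
        ("COPY app.py .", source),
    ]


cache = set()
print(build(app_steps(b"print('v1')\n"), cache))  # cold cache: all three steps run
print(build(app_steps(b"print('v2')\n"), cache))  # only the source copy reruns
```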

Parallelize Workloads

Distributing container build tasks across multiple CPU cores can enhance performance. Use tools and frameworks that support parallel execution to maximize the benefits of high-performance CPUs.
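A minimal way to parallelize independent builds from a script is a worker pool. The Python sketch below uses `concurrent.futures.ThreadPoolExecutor`; `build_image` and the job names are hypothetical stand-ins that sleep instead of building. In a real pipeline each task might launch `docker build` through `subprocess.run`, and threads suit that case because the heavy work happens in the child processes, not in Python.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def build_image(name: str, seconds: float) -> str:
    """Hypothetical stand-in for one image build; sleeps to simulate the work."""
    time.sleep(seconds)
    return name


def build_all(jobs: dict, max_workers: int) -> tuple:
    """Build every job using up to `max_workers` concurrent workers.

    Returns (names in submission order, elapsed wall-clock seconds).
    """
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        built = list(pool.map(build_image, jobs.keys(), jobs.values()))
    return built, time.perf_counter() - start


JOBS = {"api": 0.2, "worker": 0.2, "web": 0.2, "cron": 0.2}

print(build_all(JOBS, max_workers=1))  # sequential: total is the sum of the four sleeps
print(build_all(JOBS, max_workers=4))  # parallel: total is close to the longest single sleep
```

Choose `max_workers` to match the cores and memory the build host can actually spare; oversubscribing a machine makes each individual build slower.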

Investing in High-Performance CPUs Is Crucial for Staying Competitive

In a fast-paced market, investing in high-performance CPUs is less an option than a necessity. Businesses that prioritize this investment can expect faster container builds, streamlined development pipelines, and a competitive advantage.

High-performance CPUs offer significant benefits in terms of speed, efficiency, and scalability. By understanding the importance of CPU performance and adopting best practices, businesses can unlock the full potential of containerization.

Ready to take your container builds to the next level? Start by evaluating your current infrastructure and explore options for upgrading to high-performance CPUs. Your development teams and end-users will thank you for it.

For more insights and personalized advice on optimizing your containerization processes, reach out to our experts today. Let's build a faster, more efficient future together.

Efficiency and Speed in Containerization

Modern containerization depends on efficiency and speed, and hardware has a large effect on both. High-performance CPUs speed up container image creation, keep systems responsive, and shorten the developer feedback loop. Faster builds mean quicker application delivery and better use of resources, which raises productivity. Organizations that want the full benefit of containerization should therefore treat CPU performance as a deliberate part of their build infrastructure.

Understanding High-Performance CPUs

Explanation of High-Performance CPUs

High-performance central processing units (CPUs) are designed to handle demanding computations and process data quickly. Compared with standard processors, they typically offer higher clock speeds, more cores, larger caches, and newer microarchitectures that complete more work per cycle.

Benefits of High-Performance CPUs in Computing

High-performance CPUs noticeably improve system speed and responsiveness, which makes them well suited to resource-intensive tasks such as gaming, video editing, and 3D rendering. They also handle multitasking well, letting users run several demanding applications at once with fewer slowdowns. The result is a smoother, more efficient computing environment and more time spent on productive work.

Evolution of High-Performance CPUs

High-performance CPUs have evolved through a series of major advances. Manufacturers have added multiple cores, simultaneous multithreading, and more sophisticated cache hierarchies, raising throughput while also improving performance per watt. More recently, some processors have gained instructions and on-chip units aimed at accelerating artificial intelligence (AI) and machine learning (ML) workloads.

Future Trends in High-Performance CPUs

Looking ahead, CPU design continues to change. Research into quantum computing and neuromorphic engineering explores very different approaches: quantum processors may solve certain classes of problems far faster than classical machines, while neuromorphic chips mimic the brain's neural networks for cognitive computing tasks. Both remain largely experimental, and in the meantime conventional high-performance CPUs remain the workhorses for most computing, including container builds.

High-performance CPUs combine speed, efficiency, and versatility, and as they continue to improve they remain essential tools for getting the most out of modern software.

Impact of High-Performance CPUs on Container Builds

Speed and Efficiency Improvements

High-performance CPUs bring significant speed and efficiency improvements to container builds. Faster processors shorten build and deployment times, which accelerates development cycles and reduces time-to-market. Less time spent waiting on builds leaves developers more time for the work that matters.

Resource Utilization Optimization

High-performance CPUs also improve resource utilization. Because builds finish sooner, build machines are occupied for less time and can serve more jobs, making better use of shared infrastructure. That raises the throughput of containerized workflows and can lower costs, and it lets organizations scale their containerized environments as demand grows.

Enhanced Compatibility and Versatility

High-performance CPUs also handle a wide range of build workloads, from compiling code to running test suites and packaging images, without needing a separate machine for each. That versatility makes it easier to build and deploy containers as business needs and technical requirements change.

Future-Proofing Containerized Environments

Investing in high-performance CPUs for container builds yields immediate benefits and also helps future-proof containerized environments. As workloads grow and technologies advance, spare CPU capacity lets organizations adopt new tools and handle heavier builds without sacrificing performance.

Cost-Efficiency and ROI

High-performance CPUs can also improve cost-efficiency and return on investment (ROI). Shorter build times and better resource utilization lower the operational costs of container builds, and faster development cycles let companies act on market opportunities and generate revenue sooner. These savings have to be weighed against the higher price of faster hardware, but when builds shrink enough, the balance tips toward a higher ROI over time.
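Whether faster hardware pays off depends on its price as well as the time saved. The Python sketch below compares monthly CI compute cost and developer wait time for a slower, cheaper runner and a faster, pricier one. Every number, including the 22 workdays per month, is hypothetical and chosen only to show the arithmetic; substitute your own build counts, durations, and per-minute prices.

```python
WORKDAYS_PER_MONTH = 22  # hypothetical assumption


def monthly_minutes(builds_per_day: int, minutes_per_build: float) -> float:
    """Total build minutes in a month."""
    return builds_per_day * WORKDAYS_PER_MONTH * minutes_per_build


def compare(builds_per_day, before_min, before_price, after_min, after_price):
    """Return (compute cost before, compute cost after, developer wait hours saved)."""
    minutes_before = monthly_minutes(builds_per_day, before_min)
    minutes_after = monthly_minutes(builds_per_day, after_min)
    cost_before = minutes_before * before_price
    cost_after = minutes_after * after_price
    hours_saved = (minutes_before - minutes_after) / 60
    return cost_before, cost_after, hours_saved


# Hypothetical: 50 builds/day; 12-min builds at $0.008/min vs 4-min builds at $0.016/min.
before, after, hours = compare(50, 12, 0.008, 4, 0.016)
print(f"compute: ${before:.2f} -> ${after:.2f} per month; wait time saved: {hours:.0f} h")
```

In this made-up case the faster runner costs twice as much per minute yet is cheaper per month ($105.60 versus $70.40) because builds take a third of the time. Had builds only dropped from 12 to 8 minutes, compute cost would have risen, and the case for the upgrade would rest on the developer time saved.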

Security and Reliability

High-performance CPUs also affect security and reliability. Many modern processors include hardware acceleration for encryption, such as AES instructions, which reduces the cost of encrypting and decrypting data. Consistent performance across workloads also makes builds more predictable and stable. Faster hardware does not fix container vulnerabilities on its own, but reliable, well-maintained CPU platforms are part of a sound security foundation.

Innovation and Competitive Advantage

High-performance CPUs can also drive innovation. When builds are fast, developers can experiment with new approaches and technologies without long waits, which encourages a culture of innovation. Businesses that deliver fast, scalable, and secure containerized solutions can differentiate themselves and stay ahead of competitors.

Environmental Sustainability

High-performance CPUs can contribute to environmental sustainability as well. Newer processors often deliver more work per watt, and finishing builds sooner means machines spend less time running at full load. Depending on the hardware and how well it is utilized, this can lower energy use and the carbon footprint of data centers, reducing costs and supporting sustainability goals.

Collaboration and Team Productivity

The benefits extend from individual developers to whole teams. Faster builds and deployments shorten the feedback loop for everyone working on a shared codebase, so teams can iterate quickly, review changes sooner, and collaborate on complex tasks more easily. This supports a culture of teamwork and knowledge sharing and leads to better project outcomes.

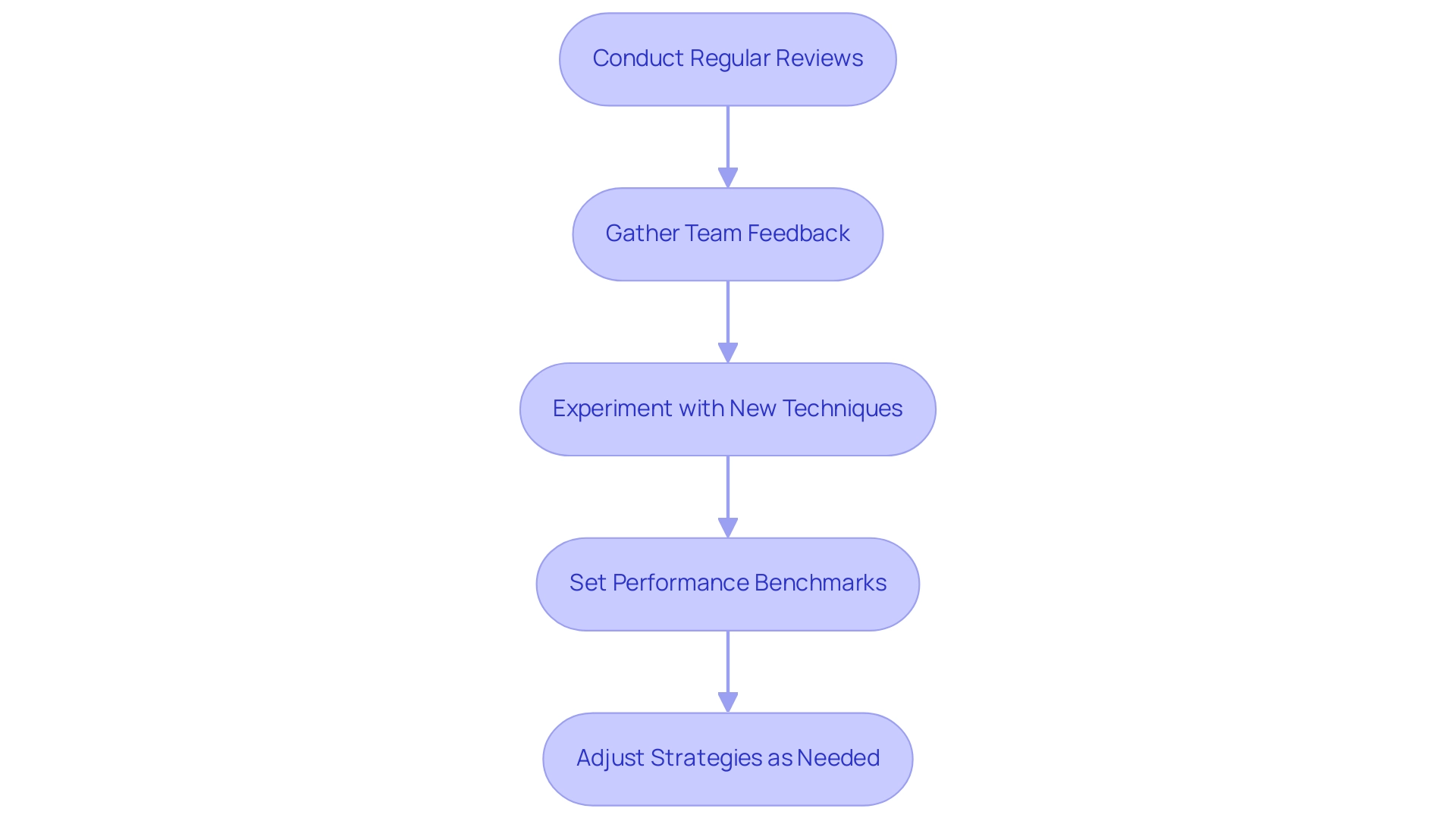

Continuous Improvement and Adaptability

High-performance CPUs also support continuous improvement. Their processing power and scalability let organizations adapt their container builds as requirements and technologies change: they can make iterative improvements, reallocate resources, and respond quickly to market conditions. This keeps containerized environments resilient and responsive to business needs.

Conclusion

The impact of high-performance CPUs on container builds is broad: faster and more efficient builds, better resource utilization, versatility, future-proofing, cost-efficiency, security and reliability, innovation, sustainability, team productivity, and adaptability. Investing in high-performance CPUs is therefore more than a hardware upgrade; it is a strategic decision that helps businesses compete where speed, agility, and reliability matter.

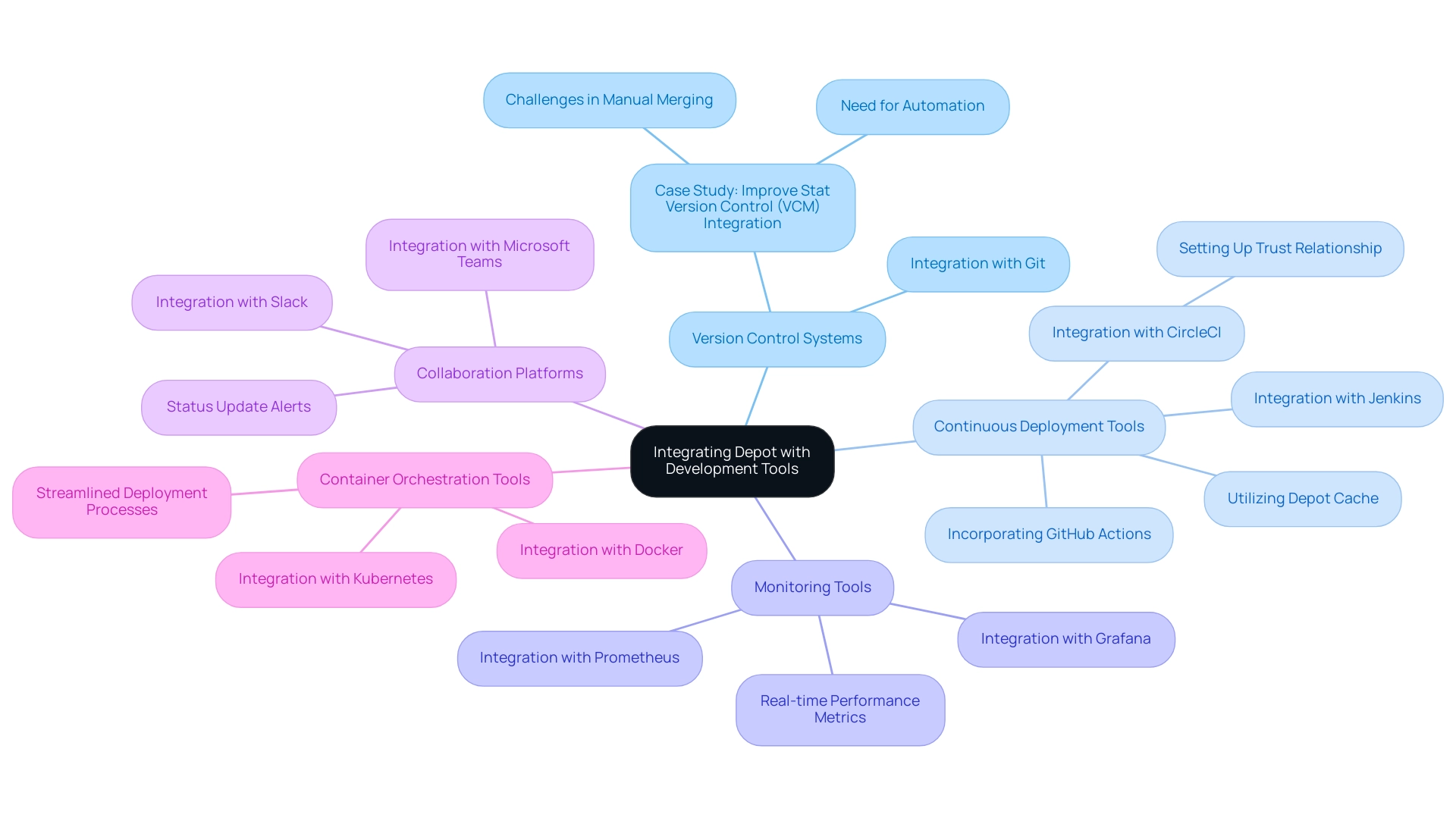

Real-World Applications

Enhancing Container Build Processes with High-Performance CPUs

High-performance CPUs can transform container build processes. With modern processors, organizations can speed up containerization, improve operational efficiency, and cut build times, which in turn supports smoother deployment pipelines and better scalability.

Exploring Case Studies

The case studies earlier in this article illustrate the practical side: organizations that adopted faster processors reported shorter build times, more parallel build jobs, and faster deployments. These outcomes show how advanced CPUs can speed up software development cycles and improve resource utilization.

Revolutionizing Performance

Beyond raw speed, high-performance CPUs help containerized applications meet the demands of dynamic workloads. Combined with container technologies, powerful processors let businesses use resources more effectively, keep applications portable, and scale infrastructure quickly.

Future Implications

Looking ahead, high-performance CPUs will continue to shape how software is built and deployed. As processor technology advances, new opportunities to accelerate container builds will follow, and organizations that keep up with CPU developments will be better placed to improve efficiency, scalability, and competitiveness.

Emerging Trends in CPU Technology

CPU technology is advancing quickly, with a focus on performance, energy efficiency, and scalability. Multi-core processors are now standard, and they are often paired with specialized accelerators such as GPUs and TPUs. Understanding these trends in processor architecture helps businesses choose hardware that suits their containerized workloads.

Security Considerations and Compliance

As high-performance CPUs become central to container environments, security and regulatory compliance matter more. Organizations must address vulnerabilities tied to CPU architectures, such as the speculative-execution flaws known as Spectre and Meltdown, by applying firmware and operating system updates and following security best practices. Combined with adherence to industry regulations, this builds a secure foundation for containerized deployments.

Scalability and Resource Management

Containerized applications scale well only when resources are managed efficiently, and CPU capacity is a central part of that. Dynamic workloads require agile resource allocation and optimization strategies to maintain performance. With intelligent resource provisioning, organizations can scale smoothly, keep operational costs down, and improve system reliability. High-performance CPUs provide the headroom that makes flexible scaling and consistent application performance possible across varying workloads.

Innovative Use Cases and Industry Disruption

High-performance CPUs are driving innovation across industries, from AI-driven workloads in healthcare to real-time analytics in finance, and are reshaping how applications are developed and deployed. Studying these use cases helps organizations spot emerging trends, best practices, and opportunities to apply advanced processors in their own containerized environments.

Future Trends

Advancements in CPU Technology for Containerization

CPUs have evolved from single-core processors to modern multi-core architectures, which has greatly improved the efficiency and scalability of containerized applications. Features such as hardware virtualization support, improved cache management, and extended instruction sets further improve container performance in cloud-native environments.

Predictions for the Role of High-Performance CPUs

High-performance CPUs are likely to keep reshaping computing. The push for more processing power is producing processors with integrated AI accelerators, alongside research into quite different approaches such as quantum and neuromorphic computing. These developments could transform industries like healthcare, finance, and autonomous systems by speeding up data processing, machine learning, and real-time analytics.

Emerging Trends in CPU Architecture

CPU architecture is also changing. Heterogeneous computing, in which CPUs work alongside specialized co-processors such as GPUs and FPGAs, enables much greater parallelism and workload acceleration. At the same time, energy-efficient processors for edge computing and IoT devices are making power optimization and thermal management central to next-generation CPU design.

The Impact of CPUs on Cloud Computing

CPUs also shape cloud computing and data center infrastructure. With the shift toward serverless computing and microservices, demand is growing for CPUs that handle diverse workloads with low latency and overhead. Innovations such as dynamic frequency scaling, hardware security features, and improved memory hierarchies are increasing the scalability and reliability of cloud services.

Future Challenges and Opportunities

CPU technology also faces challenges. Silicon-based processors are approaching physical limits, prompting researchers to explore alternative materials and novel architectures. Quantum computing, which uses quantum bits (qubits), promises dramatically faster solutions to certain problems, such as some simulations, cryptographic tasks, and optimization problems, though it targets specific problem classes rather than general-purpose computing.

Meanwhile, the spread of edge computing and IoT devices is increasing demand for ultra-low-power processors that still deliver good performance. Neuromorphic computing, inspired by the brain's neural networks, aims to produce energy-efficient chips that can learn and adapt to changing environments. Such advances could support autonomous systems, smart infrastructure, and more personalized computing.

Ethical and Societal Implications

As processors become more powerful and pervasive, ethical questions about data privacy, algorithmic bias, and autonomous decision-making become more pressing. Responsible development and deployment of AI-capable hardware, and of the systems built on it, require clear ethical frameworks, transparent algorithms, and accountability mechanisms, especially where autonomous decisions affect critical systems, privacy, security, and human autonomy.

Outlook

Developments in CPU technology could change how we compute, communicate, and interact with the digital world. From faster container builds and high-performance computing to new architectures and cloud-native innovations, CPUs remain at the center of technological progress. Addressing the challenges, opportunities, and ethical questions that come with this evolution can lead to a more sustainable, intelligent, and inclusive digital future.

Conclusion

High-performance CPUs play a crucial role in accelerating container builds by significantly reducing processing times and improving overall efficiency. As technology continues to advance, investing in high-performance CPUs will become increasingly essential for organizations looking to streamline their development processes and stay competitive in the rapidly evolving tech landscape. By harnessing the power of these advanced processors, businesses can achieve faster build times, enhanced performance, and ultimately, deliver better products to their customers.
